Fine-Tuning PyTorch Vision Models

Comparison of PyTorch CV Models on DeepFashion Dataset

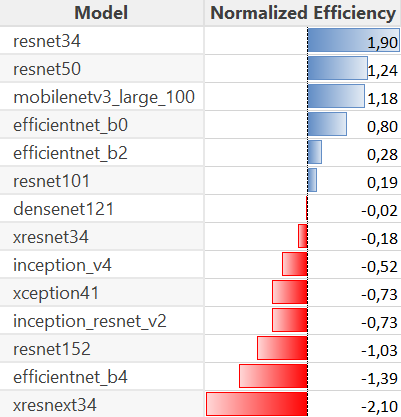

After the surprising image classification results with zero-shot CLIP, I ran a benchmark of several deep computer vision architectures from torchvision, fastai and the awesome timm library by Ross Wightman.

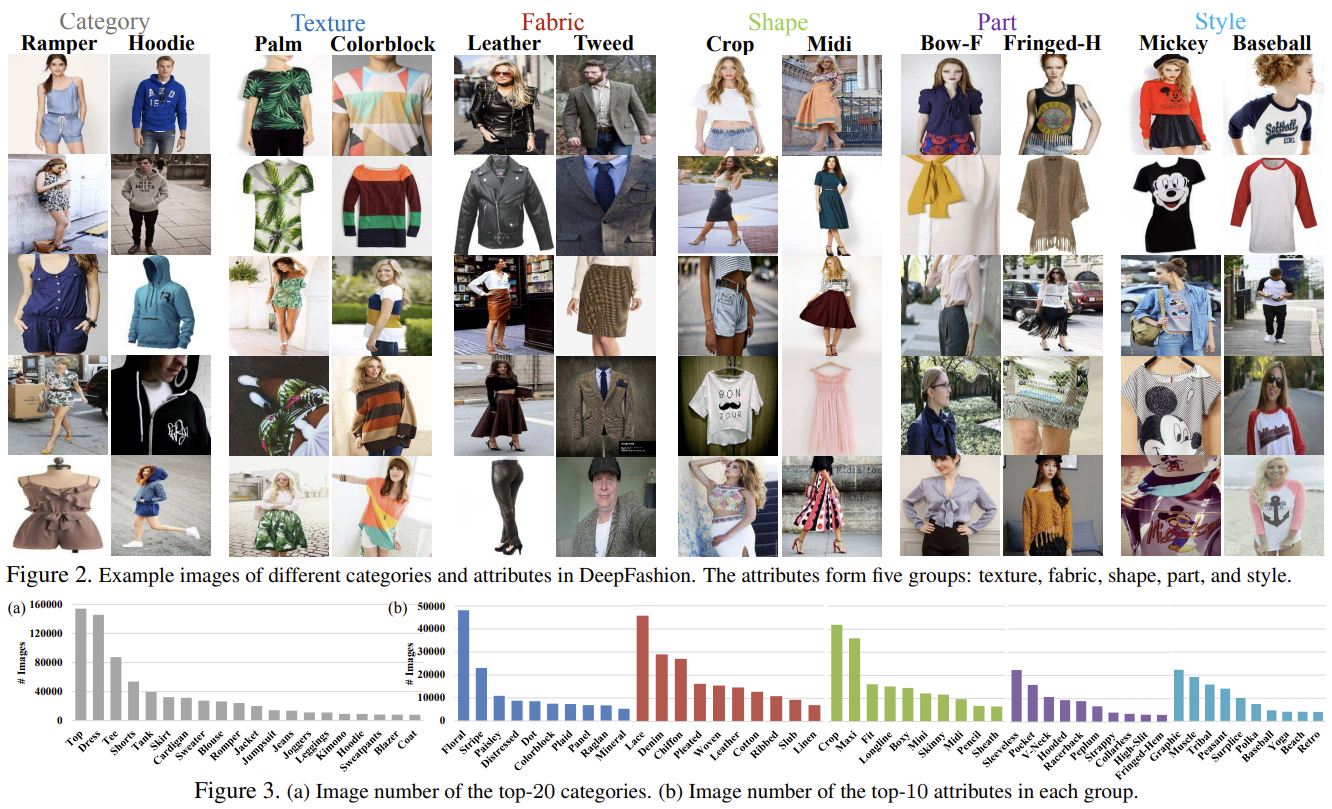

I’m using a 20-class subset of DeepFashion with around 100k images as the dataset. To accommodate the memory requirements of the different architectures, I set batch_size to 32 for all models (this leaves room for improvement in the smaller models, which could fit larger batches). All models are pretrained on ImageNet, get a newly initialized head, and are then fine-tuned on DeepFashion for one epoch with frozen weights (new head only) followed by three epochs with unfrozen weights.
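The two-phase procedure described above (train the new head only, then train all layers) can be sketched in plain PyTorch. This is a minimal illustration under stated assumptions, not the code used for the benchmark: the small convolutional `backbone` is a hypothetical stand-in for an ImageNet-pretrained network, the data is random, and the 10× lower learning rate for the unfrozen phase is an assumption. fastai wraps this pattern in `Learner.fine_tune`; a call like `learn.fine_tune(3, freeze_epochs=1)` would correspond to the 1 + 3 epoch schedule, with discriminative learning rates on top.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset

NUM_CLASSES = 20  # size of the DeepFashion subset used in the post
BATCH_SIZE = 32   # batch size used in the post


class Classifier(nn.Module):
    """Backbone plus a newly initialized head.

    The tiny conv backbone is a hypothetical stand-in for an ImageNet-pretrained
    network (e.g. a torchvision ResNet with its final layer removed).
    """

    def __init__(self, num_classes: int = NUM_CLASSES) -> None:
        super().__init__()
        self.backbone = nn.Sequential(
            nn.Conv2d(3, 16, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.AdaptiveAvgPool2d(1),
            nn.Flatten(),
        )
        self.head = nn.Linear(16, num_classes)  # fresh head for the new classes

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.head(self.backbone(x))


def set_backbone_trainable(model: Classifier, trainable: bool) -> None:
    # Freezing = excluding the backbone parameters from gradient computation.
    for p in model.backbone.parameters():
        p.requires_grad = trainable


def train_one_epoch(model: nn.Module, loader: DataLoader, lr: float) -> float:
    # Only parameters that currently require gradients go to the optimizer.
    # A fresh optimizer per epoch is a simplification (AdamW state is discarded).
    params = [p for p in model.parameters() if p.requires_grad]
    optimizer = torch.optim.AdamW(params, lr=lr)
    loss_fn = nn.CrossEntropyLoss()
    model.train()
    total = 0.0
    for x, y in loader:
        optimizer.zero_grad()
        loss = loss_fn(model(x), y)
        loss.backward()
        optimizer.step()
        total += loss.item() * x.size(0)
    return total / len(loader.dataset)  # mean loss over the epoch


def fine_tune(model: Classifier, loader: DataLoader, frozen_epochs: int = 1,
              unfrozen_epochs: int = 3, lr: float = 1e-3) -> list[float]:
    losses = []
    set_backbone_trainable(model, False)  # phase 1: new head only
    for _ in range(frozen_epochs):
        losses.append(train_one_epoch(model, loader, lr))
    set_backbone_trainable(model, True)   # phase 2: all layers
    for _ in range(unfrozen_epochs):
        losses.append(train_one_epoch(model, loader, lr / 10))  # assumed lower LR
    return losses


def make_synthetic_loader(n: int = 64) -> DataLoader:
    # Random tensors in place of DeepFashion images (hypothetical data).
    g = torch.Generator().manual_seed(0)
    x = torch.randn(n, 3, 32, 32, generator=g)
    y = torch.randint(0, NUM_CLASSES, (n,), generator=g)
    return DataLoader(TensorDataset(x, y), batch_size=BATCH_SIZE, shuffle=True)


if __name__ == "__main__":
    # One frozen epoch followed by three unfrozen epochs, as in the post.
    print(fine_tune(Classifier(), make_synthetic_loader()))
```

Setting `requires_grad = False` keeps the backbone out of the autograd graph, and passing only trainable parameters to the optimizer guarantees the frozen weights are never updated during the first phase.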

Averaged across all models, training took ~20 minutes per epoch on my hardware, so around 1:15h per model (the first epoch with frozen weights is generally a bit faster than the others). The full run took around 18 hours in total. I might eventually do a few more runs to get a feel for the actual distribution of results. Here are the results:

| Model | Library | Accuracy | Training time per epoch (mm:ss) |
|---|---|---|---|
| resnet34 | torchvision | 0.428767 | 12:34 |
| resnet50 | torchvision | 0.441164 | 18:08 |
| resnet101 | torchvision | 0.446995 | 24:50 |
| resnet152 | torchvision | 0.448151 | 32:15 |
| xresnet34 | fastai | 0.326014 | 12:44 |
| xresnext34 | fastai | 0.255726 | 15:24 |
| efficientnet_b0 | timm | 0.382433 | 13:39 |
| efficientnet_b2 | timm | 0.385690 | 16:47 |
| efficientnet_b4 | timm | 0.360475 | 24:01 |
| densenet121 | timm | 0.395409 | 20:14 |
| inception_v4 | timm | 0.395409 | 23:00 |
| inception_resnet_v2 | timm | 0.405652 | 25:18 |
| mobilenetv3_large_100 | timm | 0.385007 | 11:40 |
| vit_base_patch16_224 | timm | failed | n/a |
| xception41 | timm | 0.389262 | 23:40 |
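The overall timing figures can be cross-checked against the table. The sketch below copies the per-epoch times from the table, assumes each listed time is representative of all four epochs (1 frozen + 3 unfrozen), and excludes the failed ViT run.

```python
# Per-epoch training times copied from the table (mm:ss); the failed ViT run is excluded.
EPOCH_TIMES = {
    "resnet34": "12:34",
    "resnet50": "18:08",
    "resnet101": "24:50",
    "resnet152": "32:15",
    "xresnet34": "12:44",
    "xresnext34": "15:24",
    "efficientnet_b0": "13:39",
    "efficientnet_b2": "16:47",
    "efficientnet_b4": "24:01",
    "densenet121": "20:14",
    "inception_v4": "23:00",
    "inception_resnet_v2": "25:18",
    "mobilenetv3_large_100": "11:40",
    "xception41": "23:40",
}

EPOCHS_PER_MODEL = 4  # 1 epoch frozen + 3 epochs unfrozen


def to_minutes(mmss: str) -> float:
    """Convert an 'mm:ss' string to fractional minutes."""
    minutes, seconds = mmss.split(":")
    return int(minutes) + int(seconds) / 60


def summarize(times: dict[str, str], epochs: int = EPOCHS_PER_MODEL) -> tuple[float, float]:
    """Return (mean minutes per epoch, total hours for `epochs` epochs of every model)."""
    per_epoch = [to_minutes(t) for t in times.values()]
    mean_epoch = sum(per_epoch) / len(per_epoch)
    total_hours = sum(per_epoch) * epochs / 60
    return mean_epoch, total_hours


mean_epoch, total_hours = summarize(EPOCH_TIMES)
print(f"mean epoch: {mean_epoch:.1f} min, full run: {total_hours:.1f} h")
# prints: mean epoch: 19.6 min, full run: 18.3 h
```

Both numbers line up with the ~20 minutes per epoch and ~18 hours quoted for the full run.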

I was a little surprised by how well the good old ResNets perform in this setting, even the smaller ones. In terms of efficiency (cost/time to accuracy), they are certainly a great choice. I suspect the training setup (dataset, hardware, batch size, number of epochs, …) is partly why some models didn’t perform better. But for my use case, this was quite informative.
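One way to make "cost/time to accuracy" concrete is a Pareto front: keep only the models that no other model beats on both training time and accuracy. The sketch below uses the accuracies and per-epoch times from the table; with a single run per model, small accuracy gaps may well be noise.

```python
# (accuracy, per-epoch training time as mm:ss) copied from the table; ViT failed and is omitted.
RESULTS = {
    "resnet34": (0.428767, "12:34"),
    "resnet50": (0.441164, "18:08"),
    "resnet101": (0.446995, "24:50"),
    "resnet152": (0.448151, "32:15"),
    "xresnet34": (0.326014, "12:44"),
    "xresnext34": (0.255726, "15:24"),
    "efficientnet_b0": (0.382433, "13:39"),
    "efficientnet_b2": (0.385690, "16:47"),
    "efficientnet_b4": (0.360475, "24:01"),
    "densenet121": (0.395409, "20:14"),
    "inception_v4": (0.395409, "23:00"),
    "inception_resnet_v2": (0.405652, "25:18"),
    "mobilenetv3_large_100": (0.385007, "11:40"),
    "xception41": (0.389262, "23:40"),
}


def to_seconds(mmss: str) -> int:
    minutes, seconds = mmss.split(":")
    return int(minutes) * 60 + int(seconds)


def pareto_front(results: dict[str, tuple[float, str]]) -> list[str]:
    """Models not beaten on both speed and accuracy, fastest first."""
    # Sort by training time; on equal times, the more accurate model comes first.
    ranked = sorted(results.items(), key=lambda kv: (to_seconds(kv[1][1]), -kv[1][0]))
    front, best_acc = [], float("-inf")
    for name, (acc, _) in ranked:
        # Keep a model only if it is strictly more accurate than every faster model.
        if acc > best_acc:
            front.append(name)
            best_acc = acc
    return front


print(pareto_front(RESULTS))
# prints: ['mobilenetv3_large_100', 'resnet34', 'resnet50', 'resnet101', 'resnet152']
```

On these numbers the front consists of `mobilenetv3_large_100` and the four ResNets; every other model is both slower and less accurate than resnet34.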

I’m investigating why the timm ViT model failed to run in my benchmark, and also how to run the same test with one of the pretrained CLIP vision models (ViT or ResNet).

Update:

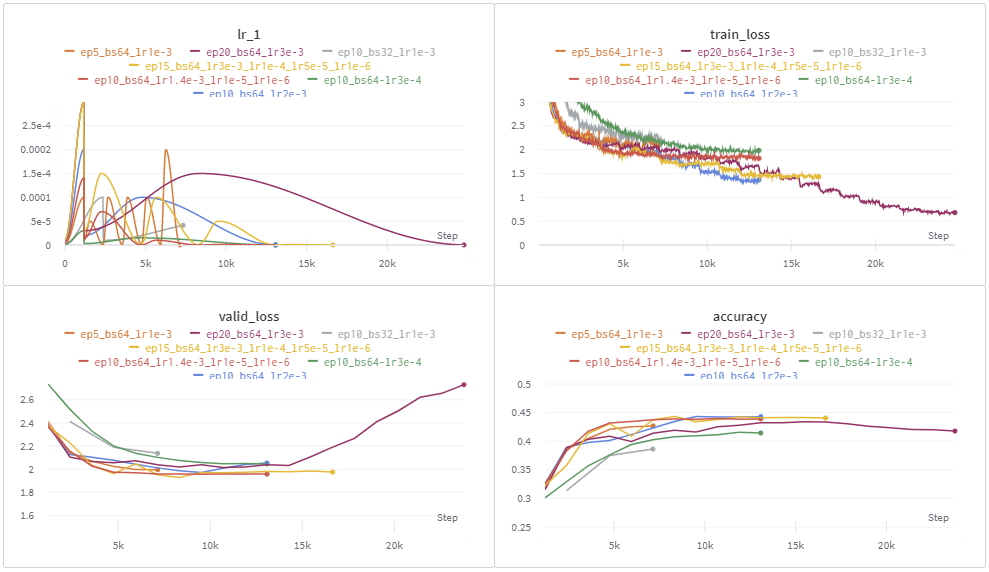

I’d wanted to try Weights & Biases for some time, and this project gave me a great opportunity. After comparing architectures, I ran a couple of (parallel) experiments locally and in Colab to compare ResNet34 with different learning rate schedules on the same dataset. Please see here for more details.
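The post doesn’t say which learning rate schedules were compared. As an assumption, the sketch below traces three common ones with PyTorch’s built-in schedulers (a constant rate, `CosineAnnealingLR` and `OneCycleLR`), stepping once per batch on a dummy parameter so the curves can be inspected, or logged per step (for example with `wandb.log`), before spending GPU time. The step count and `max_lr` are illustrative values, not the ones used in the experiments.

```python
import torch
from torch import nn
from torch.optim.lr_scheduler import CosineAnnealingLR, OneCycleLR


def lr_trace(schedule: str, total_steps: int = 100, max_lr: float = 1e-3) -> list[float]:
    """Record the learning rate at every step for one (assumed) schedule."""
    # A single dummy parameter is enough to drive an optimizer and its scheduler.
    param = nn.Parameter(torch.zeros(1))
    optimizer = torch.optim.SGD([param], lr=max_lr, momentum=0.9)
    if schedule == "constant":
        scheduler = None
    elif schedule == "cosine":
        scheduler = CosineAnnealingLR(optimizer, T_max=total_steps)
    elif schedule == "one_cycle":
        scheduler = OneCycleLR(optimizer, max_lr=max_lr, total_steps=total_steps)
    else:
        raise ValueError(f"unknown schedule: {schedule}")

    trace = []
    for _ in range(total_steps):
        trace.append(optimizer.param_groups[0]["lr"])
        optimizer.step()  # a real loop would call loss.backward() first
        if scheduler is not None:
            scheduler.step()  # advance the schedule once per batch
    return trace


for name in ("constant", "cosine", "one_cycle"):
    trace = lr_trace(name)
    print(f"{name:>9}: start={trace[0]:.2e} peak={max(trace):.2e} end={trace[-1]:.2e}")
```

Cosine annealing starts at the full rate and decays it, while one-cycle warms up from `max_lr / 25` (PyTorch’s default `div_factor`) to `max_lr` before annealing, which is the kind of difference such a comparison would probe.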