Embedded AI

CLIP running on a Raspberry Pi

With CLIP’s impressive image classification capabilities, wouldn’t it be nice to use it on embedded devices?

But getting PyTorch and its dependencies to run on a small ARM CPU has to be a major challenge, right?

And pretrained CLIP, unlike MobileNet, is certainly not a model made for embedded devices. It can’t fit into memory, can it?

Let’s dig out the old Raspberry Pi 3 from some half-forgotten box and order a camera module on Amazon for less than 10€.

The capture is actually quite impressive for a cheap, embedded solution. Lighting might need some improvements, but resolution and focus should provide good material for detection.

Fast forward through the PyTorch and CLIP setup steps...

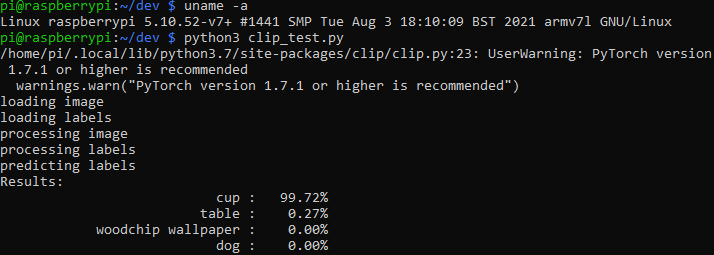

Let’s try to get some CLIP demo code running on the Pi:

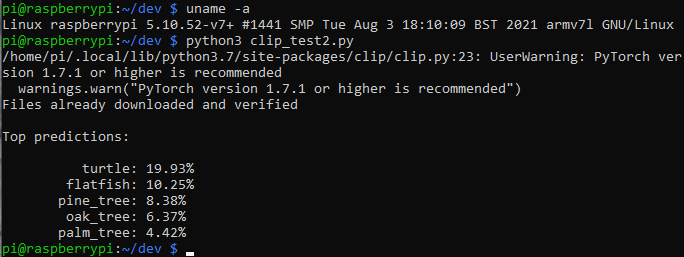
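For readers who prefer text over a screenshot, here is a minimal sketch of what such a demo could look like, adapted from the example in the OpenAI CLIP README. The RN101 variant is the one named in the tweet at the end of this post; the helper names `build_prompts` and `classify_image`, the prompt template, and any file name or label list passed in are illustrative assumptions, not the exact code that ran on the Pi.

```python
def build_prompts(labels):
    """Turn plain class names into caption-style prompts for CLIP's text encoder."""
    return [f"a photo of a {label}" for label in labels]


def classify_image(image_path, labels, model_name="RN101"):
    """Return {label: probability} for one image, running on the CPU."""
    # Imported lazily so build_prompts stays usable without PyTorch installed.
    import torch
    import clip  # the package from the openai/CLIP repository
    from PIL import Image

    # clip.load returns the model and the matching preprocessing transform,
    # which also resizes and crops the image to the model's input resolution.
    model, preprocess = clip.load(model_name, device="cpu")
    image = preprocess(Image.open(image_path)).unsqueeze(0)
    text = clip.tokenize(build_prompts(labels))

    with torch.no_grad():
        logits_per_image, _ = model(image, text)
        probs = logits_per_image.softmax(dim=-1)[0].tolist()
    return dict(zip(labels, probs))
```

Calling something like `classify_image("capture.jpg", ["cat", "keyboard", "coffee mug"])` (file name and labels are hypothetical) would return one probability per label.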

And now for a zero-shot prediction on the (resized) camera output.
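To make "zero-shot" a bit more concrete: CLIP embeds the image and each candidate label text into the same vector space, and the prediction is a softmax over their scaled cosine similarities. The sketch below reproduces just that scoring step in plain Python. The toy embeddings are made up for illustration, and the scale of 100 follows the zero-shot example in the CLIP README rather than anything measured on the Pi.

```python
import math


def normalize(vec):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def zero_shot_probs(image_embedding, text_embeddings, scale=100.0):
    """Softmax over scaled cosine similarities between one image and each label."""
    img = normalize(image_embedding)
    logits = [
        scale * sum(a * b for a, b in zip(img, normalize(txt)))
        for txt in text_embeddings
    ]
    # Subtract the maximum before exponentiating for numerical stability.
    top = max(logits)
    exps = [math.exp(logit - top) for logit in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Toy 3-dimensional embeddings (hypothetical); real CLIP embeddings are much larger.
probs = zero_shot_probs([1.0, 0.0, 0.0], [[0.9, 0.1, 0.0], [0.0, 1.0, 0.0]])
```

The label whose text embedding points in nearly the same direction as the image embedding ends up with almost all of the probability mass, which is why no task-specific training is needed.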

Next up: image classification on a real-time stream from the camera. Let’s see some latency measurements for CLIP.
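One way to collect such measurements is a small timing harness around whatever function performs a single inference. The following is only a sketch under assumptions: `measure_latency` and `summarize` are hypothetical helper names, and the workload timed here is a stand-in, not a CLIP forward pass, so it says nothing about actual numbers on the Pi.

```python
import statistics
import time


def measure_latency(fn, warmup=1, runs=5):
    """Call fn() repeatedly and return the per-call latencies in seconds."""
    for _ in range(warmup):
        fn()  # untimed: the first call often carries one-off setup costs
    latencies = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        latencies.append(time.perf_counter() - start)
    return latencies


def summarize(latencies):
    """Reduce a list of latencies to a few headline numbers."""
    return {
        "mean_s": statistics.mean(latencies),
        "median_s": statistics.median(latencies),
        "max_s": max(latencies),
    }


# Stand-in workload; on the Pi this would be one CLIP inference on a camera frame.
timings = measure_latency(lambda: sum(i * i for i in range(10_000)))
stats = summarize(timings)
```

For a live stream, the median latency gives a rough upper bound on the achievable frame rate, while the maximum hints at how bad the occasional stall can get.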

Not actually expected this to work... #openai #clip (RN101) inference on #raspberrypi 3. What if we'd connect the camera module though🤔 #embeddedclip pic.twitter.com/GniFbQvOll

— Jonathan Rahn (@rahnjonathan) August 26, 2021